Assistant professor of bioengineering Jia Liu received his Ph.D. in physical chemistry from Harvard in 2014 and then completed postdoctoral research at Stanford from 2015 to 2018. He joined the School of Engineering and Applied Sciences (SEAS) faculty in 2019. His research focuses on the development of soft bioelectronics, cyborg engineering, genetic and genomic engineering, and computational tools for addressing questions in areas including brain-machine interfaces, neuroscience, cardiac diseases, and developmental disorders.

In November, the Liu Lab received an award from the National Science Foundation’s (NSF) Emerging Frontiers in Research and Innovation (EFRI) program.

What’s the Liu Lab?

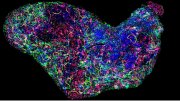

“The Liu Lab at SEAS [Harvard’s School of Engineering and Applied Sciences] operates at the cutting edge of bioelectronics, genomics, neuroscience, tissue engineering, and artificial intelligence. Our mission is to develop advanced flexible bioelectronic technologies that seamlessly integrate with biological systems—particularly human tissues and organs—to tackle complex challenges in biology, medicine, and computation. We focus on creating flexible bioelectronic devices, multimodal spatial biology tools, and AI-driven systems to interface with the brain and other organ systems, driving breakthroughs in understanding cellular dynamics and treating a range of disorders.

“Our work bridges fundamental science and translational engineering. These goals are realized through SEAS’s unique structure without departmental boundaries, which allows us to bring together experts in bioengineering, electrical engineering, chemistry, bioinformatics, computer science, materials science, and mechanical engineering.”

What is your past research, and what are you currently working on with your team?

“Current artificial intelligence (AI) and machine learning (ML) technologies do not match biological systems in terms of adaptability, continuous learning, and computational efficiency. We previously developed flexible and stretchable electronics, which can adapt to the morphological change [that occurs] during tissue development.

“When these electronics are integrated with stem cell-derived brain organoids to form ‘cyborg organoids,’ they can continuously monitor and control brain organoid activity. The research funded by this grant aims to further integrate brain organoids with these bioelectronics to create a bio-symbiotic system capable of complex, long-term computational tasks.”

What are nanoelectronics? How can biotechnology, including brain-computer interfaces, help people?

“Nanoelectronics involves the study and application of electronic devices and systems at the nanometer scale. In our lab, we utilize nanoelectronics to develop ‘tissue-like’ bioelectronic tools that can seamlessly interact with biological systems at the cellular level, enabling long-term tracking and modulation of cellular activities while minimizing tissue damage and immune responses to foreign bodies.

“Biotechnology, including brain-computer interfaces (BCIs), holds transformative potential to improve human health and quality of life. BCIs enable direct communication between the brain and external devices, offering hope to individuals with neurological conditions. For instance, BCIs can help restore motor function in patients with paralysis by translating neural signals into movements via robotic limbs or other assistive technologies. Similarly, for neurological disorders such as epilepsy or depression, bioelectronics can monitor and modulate neural activity to restore healthy brain function.”

What’s your favorite part of the research process?

“My favorite part of the research process is the transformation of a challenging idea into something tangible—when a concept evolves from a sketch on paper to a functional prototype or a validated experiment in the lab. It’s incredibly fulfilling to see these ideas come to life and contribute to advancing knowledge or solving real-world challenges.

“I also find the collaborative aspect of research deeply rewarding. Brainstorming with students and colleagues from diverse backgrounds, tackling complex questions together, and seeing the unique perspectives each team member brings to the table is immensely inspiring. These interactions often spark unexpected discoveries and innovative solutions. I often encourage my senior students to explore research directions that can surprise me, and they often accept this challenge and produce extremely innovative ideas.

“Finally, I take great satisfaction in translating research into practical applications through collaboration with doctors and startups. Knowing that the work we do in the lab has the potential to improve lives—whether by developing new implantable devices, advancing brain-computer interfaces, or creating tools to enhance human capabilities—continues to motivate and energize me in this field.”

What is the most exciting thing you’ve worked on so far, and what’s the most exciting thing you’re hoping to work on?

“The brain is an incredibly dynamic system, with neural activity that never stops changing. Capturing this constant change is challenging without stable recording setups. In our recent study, we developed tissue-like flexible nanoelectronics to achieve stable neural recordings over several months, revealing that neural dynamics from the same neurons are constantly changing, even under consistent sensory input.

“We hypothesize that this continuous change, called neural representational drift, is closely linked to the brain’s ability to perform lifelong learning—a significant hurdle in AI due to ‘catastrophic forgetting,’ where old knowledge deteriorates as new tasks are introduced.

“To test this, we developed DriftNet, a novel framework for deep neural networks inspired by neural representational drift. DriftNet introduces external noise and organizes solutions into task-specific groups for future retrieval. Our experiments show that DriftNet not only significantly outperforms conventional networks that lack drift but also surpasses other state-of-the-art lifelong learning methods. We successfully scaled DriftNet and tested it on two Large Language Model (LLM) architectures, GPT-2 and RoBERTa, demonstrating a robust, cost-effective, and adaptive approach to equipping LLMs with lifelong learning capabilities.”
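The idea described in the final answer (inject noise during training, keep the drifting solutions grouped by task, and retrieve the right group later) can be illustrated with a toy sketch. This is not the DriftNet implementation: the one-parameter linear model, the function names `noisy_sgd_snapshots` and `retrieve_task`, the hyperparameters, and the rule of retrieving the group with the lowest error on a small labeled probe are all assumptions chosen for illustration.

```python
import numpy as np

def noisy_sgd_snapshots(x, y, rng, steps=400, lr=0.05, noise_std=0.05, keep_last=50):
    """Fit y ~ w * x with gradient descent plus injected Gaussian noise.

    Returns the weights visited during the last `keep_last` steps. Because of
    the noise, these keep drifting around the minimum instead of settling.
    """
    w = 0.0
    snapshots = []
    for step in range(steps):
        grad = np.mean(2.0 * (w * x - y) * x)  # gradient of the mean squared error
        w -= lr * grad
        w += noise_std * rng.normal()          # external noise (hypothetical scale)
        if step >= steps - keep_last:
            snapshots.append(w)
    return np.array(snapshots)

def retrieve_task(groups, x_probe, y_probe):
    """Return the task name whose group of snapshots best fits the probe data."""
    def group_loss(weights):
        preds = weights[:, None] * x_probe[None, :]
        return float(np.mean((preds - y_probe[None, :]) ** 2))
    return min(groups, key=lambda name: group_loss(groups[name]))

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=200)

# Two toy "tasks": the same inputs mapped through different slopes.
true_slopes = {"task_a": 2.0, "task_b": -3.0}

# One group of drifting solutions per task, stored separately so that
# learning task_b never overwrites what was learned for task_a.
groups = {
    name: noisy_sgd_snapshots(x, slope * x, rng)
    for name, slope in true_slopes.items()
}

# Later, a small probe from an unlabeled task is matched to a stored group.
x_probe = rng.uniform(-1.0, 1.0, size=20)
retrieved = retrieve_task(groups, x_probe, true_slopes["task_b"] * x_probe)
print(retrieved)
```

In this sketch the probe is generated from the second task, so the group stored for `task_b` is the one retrieved. Keeping a separate group per task is what avoids overwriting earlier solutions here; how the actual framework forms and selects its groups is not specified in the interview.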