What if a cardiac surgeon could operate on a beating heart without opening the patient’s chest? Or a flexible robot could navigate the delicate branching of blood vessels, or bronchi in the lungs, and then stiffen to perform surgery at its tip? Or a magnetic field could be engineered to drive a plaque-clearing robot inside a person’s arteries?

These kinds of innovations are already in the vanguard of the field of medical robotics, says professor of surgery Pierre Dupont, a leading designer of robotic systems for use in healthcare. “I thought of going into medicine instead of engineering,” he admits, “so when I had the chance to combine the two, it was a fantastic opportunity.” The field encompasses precision instruments that can be deployed by doctors inside the human body for visualization, diagnosis, and treatment, but also patient-focused inventions, from handheld devices that let diabetics control their blood sugar to wearable robots that help stroke patients walk again.

Not all of these achievements will make it out of the lab. But gradual trends are emerging: toward increasing autonomy for the robots themselves, and greater personalization for users, whether they are patients or providers of healthcare.

Dupont’s pediatric surgical research lab, populated mostly by postdoctoral fellows and graduate students, is based at Boston Children’s Hospital. That’s where he learned about the 1 in 3,000 children born with a detached esophagus. “Their throat ends in a closed pouch,” he says, “and the stomach has a little stub that is also closed.” If the gap between them is more than several centimeters, sewing the two ends together will not work: “the sutures will tear out.” Children with this severe form of the condition (there are about 15 to 20 each year at his hospital) “typically come in at 3 months old for a repair.” The repair procedure requires them to be paralyzed and sedated in intensive care for weeks, as the surgeon gradually tightens the sutures, millimeter by millimeter, to stimulate tissue growth. Eventually, the two ends grow long enough to be reconnected.

But what, Dupont wondered when he first heard about the procedure, was happening to the child’s brain “all that time” in the ICU? While some researchers have focused on trying to grow living tissue in a lab that could be used in a repair, Dupont realized, “If you have some remaining tissue inside the body that still has the right properties, then by simply using a robot to apply traction forces in the appropriate way, you can stimulate that tissue to grow—to elongate—and thus produce the working length of tissue that you need.” The suture-tightening remedy relies on the same principle, but is “a gruesome thing.”

Dupont decided to design a tube-like robot that could temporarily bridge the gap and simultaneously apply traction forces to both ends of the detached esophagus, in order to stimulate growth while allowing the child to be awake and mobile. In tests in pigs, the animals remained alert, and moved and ate normally with the device in place. Although the robot could not proceed to human testing, because the costs of commercializing the invention were too great for such a small number of cases, the work hints at the extraordinary feats of engineering now possible in medical robotics, from improving surgical technique to improving patients’ daily lives.

Making “Curvy Robots” Straightforward

Roboticists are at heart inventors, often working at the edge of what is possible. “I don’t want to do something that other people are already doing,” says Dupont. “I want to do something that is far out there.” On the other hand, he says, “I came to the hospital because the clinicians were right there to tell me, ‘Pierre, that’s a stupid idea.’ And so, it gave me an opportunity to focus my work to ensure clinical relevance.”

Dupont is an expert guide to medical robotics: he and colleagues recently published an overview of the field in Science, documenting the proliferation of papers on the subject, from fewer than 10 in 1990 to more than 5,200 in 2020. Eighty percent of the research has been published in the last 10 years, driven largely by healthcare systems’ acceptance of robots and investment in their development. But the field is still nascent, and many roboticists, says Dupont, tend to invent devices that are elegant from an engineering perspective but aren’t necessarily more practical than existing technologies.

As a personal example, Dupont cites an elaborate method his research team developed for engineering the electromagnetic fields of a magnetic resonance imaging (MRI) machine in order to propel semi-autonomous robots through the human body. A proof-of-principle experiment showed that the magnetic field could even accelerate tiny steel-alloy “millibots” fast enough to punch through arterial plaque blockages (residual debris would be captured by pre-placed filters, which are currently in trials). This ability to penetrate soft tissue using electromagnetism could someday prove useful in hard-to-reach places. For example, the device could reestablish the flow of cerebrospinal fluid in the brains of patients with hydrocephalus, a condition in which blockages lead to potentially fatal pressure buildup. (But entrenched and effective treatments, such as balloon angioplasty for widening arteries, are a formidable barrier to the perfection of new methods.)

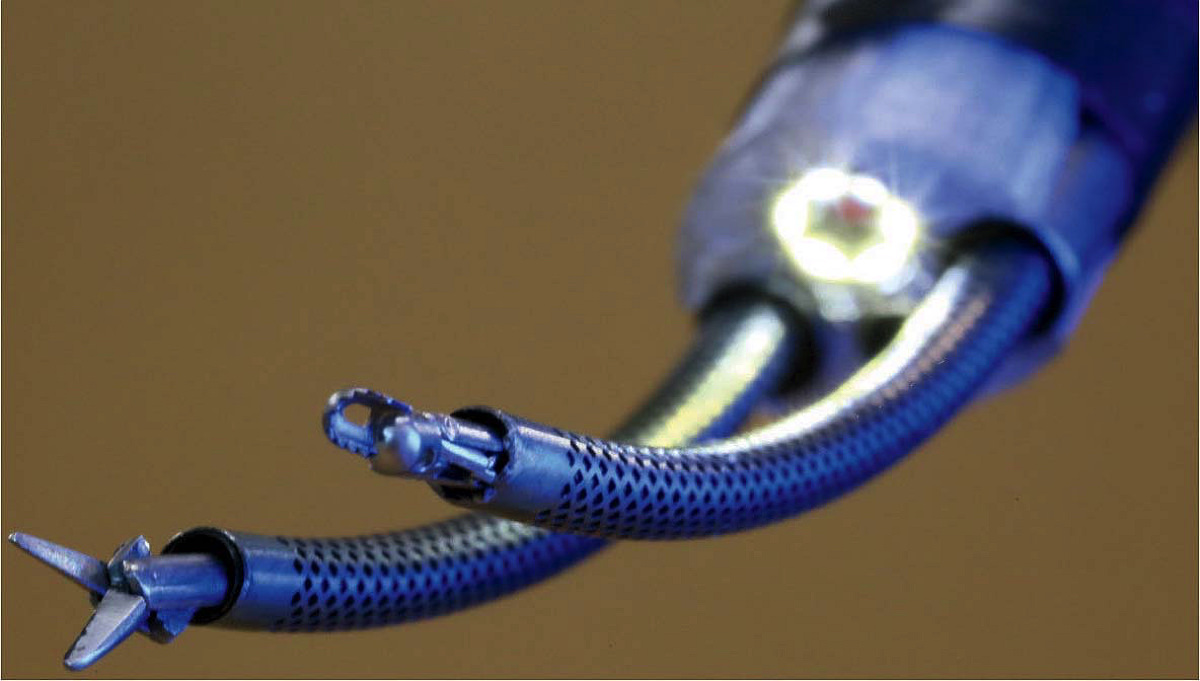

The best-developed of Dupont’s inventions are catheter-like “continuum robots” for navigating the unique curves and shapes of branching biological structures. “The traditional industrial robot was something that looked like a human arm and had discrete joints,” he explains. “If you want to wind your way into the body, discrete, jointed, rigid link designs don’t work.” Continuum robots can instead bend in any direction and behave as though they have an infinite number of joints. Dupont has developed one based on concentric tubes, like those of a telescoping radio antenna. The robot is built from lengths of pre-curved, flexible metallic tubing, and its tip can be precisely positioned by rotating the nested tubes at their bases. The tubes’ inherent curves interact elastically to position the tip and also control the shape of the robot along its entire length. The applied mathematics he learned during a postdoctoral fellowship in engineering at Harvard, he says, has “made modeling these curvy robots much more straightforward,” so that they can be precisely engineered and controlled.

Curved concentric tubes (within perforated sheaths) enable surgeons to precisely position the tools at the tips of this two-armed endoscopic neurosurgical robot.

Photograph courtesy of Pierre Dupont
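The way pre-curved tubes “interact elastically” can be illustrated with a deliberately simplified model. The Python sketch below assumes torsionally rigid tubes that overlap along their whole length and form a single constant-curvature arc; under those assumptions, the combined curvature is the stiffness-weighted average of each tube’s precurvature, pointed in the direction set by the rotation at its base. The `Tube` class, the function names, and all numbers are hypothetical illustrations, not Dupont’s models, which must also account for torsion and tubes of different lengths.

```python
import math
from dataclasses import dataclass


@dataclass
class Tube:
    """One pre-curved tube in a concentric tube robot (illustrative values only)."""
    stiffness: float     # bending stiffness E*I, in arbitrary units
    precurvature: float  # curvature of the tube when unloaded, in 1/m
    rotation: float      # rotation applied at the tube's base, in radians


def combined_curvature(tubes: list[Tube]) -> tuple[float, float]:
    """Stiffness-weighted average of the tubes' bending vectors.

    Each tube contributes a vector of length `precurvature` pointing in the
    direction it would bend on its own (set by `rotation`). Assumes the tubes
    are torsionally rigid and overlap along the whole section.
    """
    total_stiffness = sum(t.stiffness for t in tubes)
    kx = sum(t.stiffness * t.precurvature * math.cos(t.rotation) for t in tubes)
    ky = sum(t.stiffness * t.precurvature * math.sin(t.rotation) for t in tubes)
    return kx / total_stiffness, ky / total_stiffness


def arc_tip(kx: float, ky: float, length: float) -> tuple[float, float, float]:
    """Tip position of a constant-curvature arc that starts at the origin along +z."""
    kappa = math.hypot(kx, ky)
    if kappa < 1e-12:
        return 0.0, 0.0, length          # effectively straight
    bend_direction = math.atan2(ky, kx)  # plane in which the arc bends
    lateral = (1.0 - math.cos(kappa * length)) / kappa
    axial = math.sin(kappa * length) / kappa
    return (lateral * math.cos(bend_direction),
            lateral * math.sin(bend_direction),
            axial)


if __name__ == "__main__":
    # Two identical tubes; sweep the outer tube's base rotation.
    for degrees in (0, 90, 180):
        inner = Tube(stiffness=1.0, precurvature=10.0, rotation=0.0)
        outer = Tube(stiffness=1.0, precurvature=10.0, rotation=math.radians(degrees))
        kx, ky = combined_curvature([inner, outer])
        x, y, z = arc_tip(kx, ky, length=0.1)
        print(f"{degrees:3d} deg -> tip at ({x:.4f}, {y:.4f}, {z:.4f}) m")
```

In this toy model, rotating two identical tubes 180 degrees apart cancels their curvatures and straightens the section, while aligning them gives the full precurvature; angles in between sweep the tip through intermediate positions.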

Dupont developed his first concentric tube robot while trying to solve a problem in fetal cardiology. Most recently, he has parlayed this expertise into the continuing development of a two-armed robot for performing endoscopic neurosurgery through a single small hole in the skull. “Neurosurgeons told me, ‘Look, there is only so much I can do with one tool. I want two arms as if I’m doing regular open surgery.’” With funding from the National Institutes of Health, he has developed a system with arms that can be moved and controlled from interfaces attached to the surgeon’s fingers. On a screen, the surgeon is able to see what is happening at the tip of the robotic instruments. “It’s an exquisite system,” he says, one that could be used in as many as 20,000 brain tumor surgeries a year. And it has the potential to be useful in more common surgical procedures, too.


Surgical Robots That “See” and “Feel”

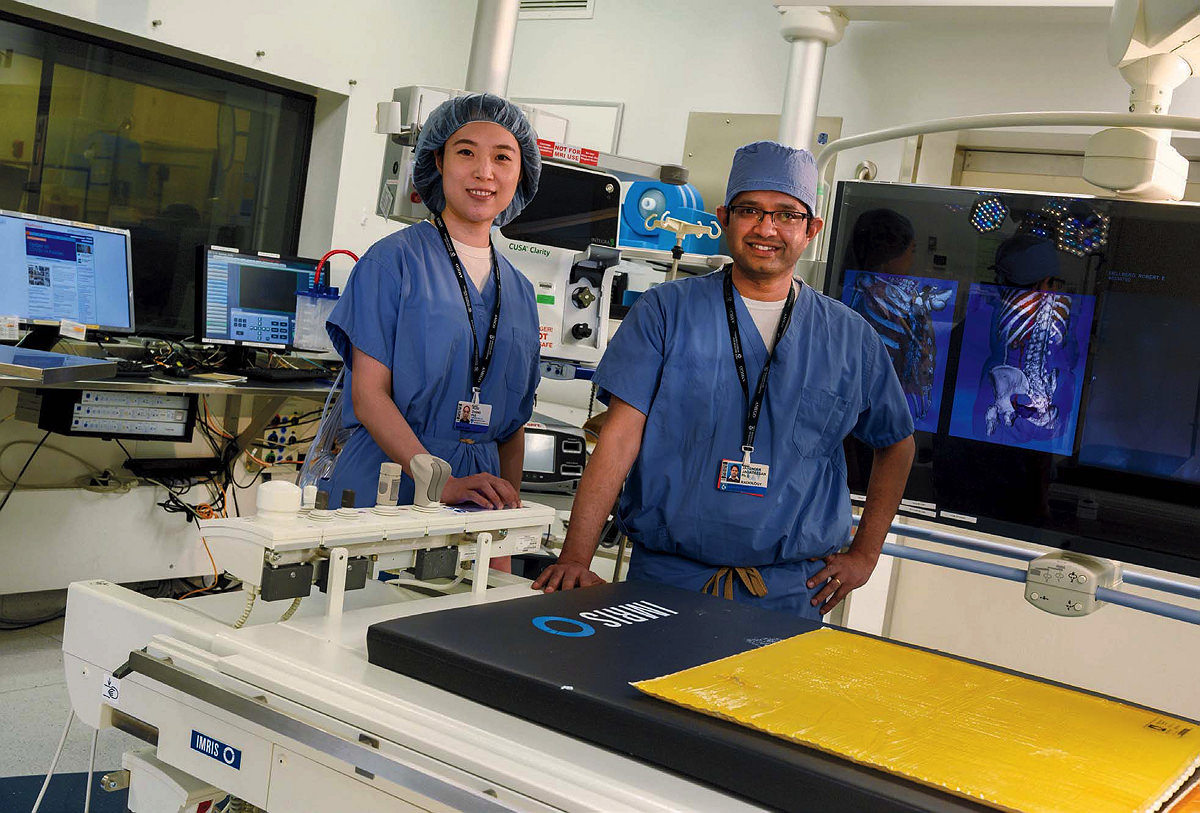

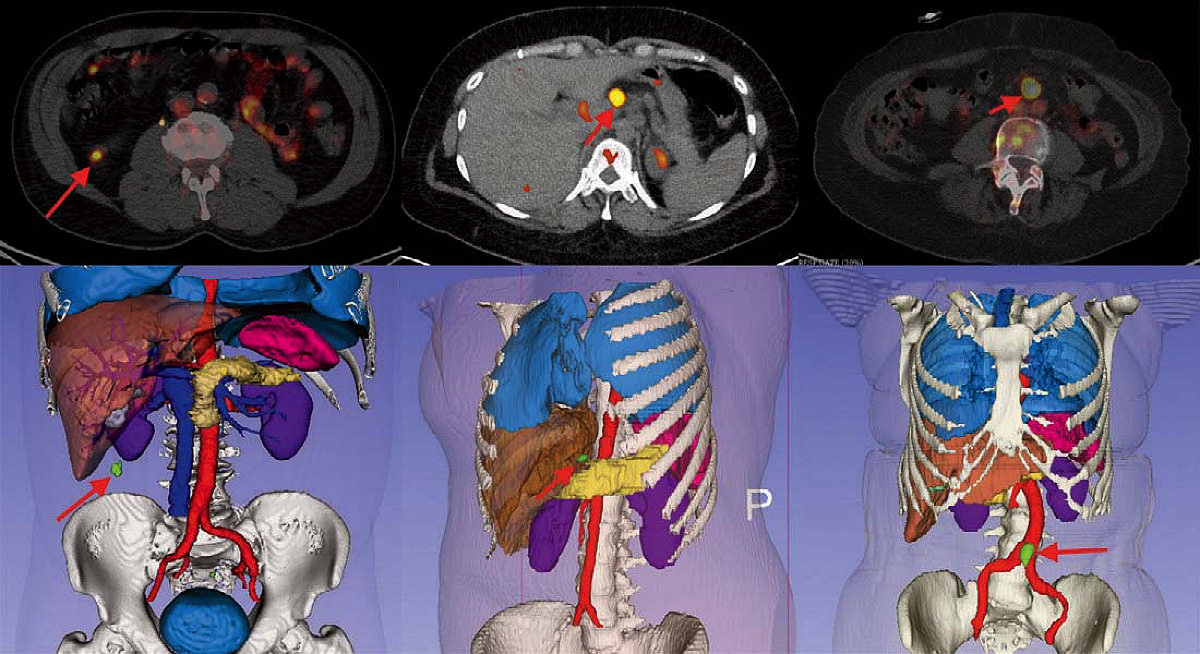

Concentric tube robots are ideal for minimally invasive procedures, which typically lead to faster patient recovery than open surgeries. But because they are performed through tiny incisions, surgeons also need better tools to see and perhaps feel what they are doing. At nearby Brigham and Women’s Hospital, associate professor of radiology Jayender Jagadeesan is undertaking such work. “Robots enhance dexterity,” he says. “But that is not enough. During surgery, there is going to be blood, there is going to be smoke, there are going to be lighting issues, and key anatomical structures are often hidden behind the exposed tissue. You also have to improve the vision of the surgeon.” Jagadeesan has been developing robotic surgical systems that can integrate a variety of imaging technologies, from stereo laparoscopy to MRI, CT, and ultrasound scans, to create a three-dimensional, real-time display that clearly shows what the robot is doing inside a patient.

Jayender Jagadeesan (right) develops three-dimensional imaging systems for integration into robotic surgeries. Postdoctoral fellow Ruisi Zhang works on haptic feedback for surgical controls to convey the illusion of touch.

Photograph by Jim Harrison; graphic courtesy of Jayender Jagadeesan

He has also developed a visual overlay that pinpoints the location of subsurface tumors. Typically, surgeons locate subsurface tumors during open surgery by palpating the tissue with their fingers. “That’s a very crude way of doing it,” says Jagadeesan, a hit-or-miss method that leaves doctors guessing at tumor boundaries. If, during subsequent analysis of the excised mass, a pathologist finds that too little of the surrounding tissue has been removed (an “insufficient margin”), potentially leaving cancerous cells behind, a second surgery must be performed. Because Jagadeesan’s system shows the shape and location of tumors in three dimensions, it helps surgeons remove a sufficient amount of the surrounding tissue the first time.

Jagadeesan’s navigation system has been used for a number of surgical procedures, including the removal of malignant lymph nodes, renal cancers, and parathyroid and thyroid tumors. And it could prove especially useful for operations to remove stage one lung cancers. At the moment, the standard of care for such tumors involves removal of an entire lobe of the lung, which permanently reduces the patient’s respiratory capacity. But “with real-time margin assessment in the operating room,” says Jagadeesan, surgeons can determine the location of tumors and the necessary margins even before they begin cutting tissue, removing only what is necessary. This application of the system is currently in clinical trials.

One of Jagadeesan’s postdoctoral fellows, Ruisi Zhang, is working on a further enhancement for surgical robots: haptic feedback that could give surgeons the sensation of touch. To achieve this, she says, the “first challenge is to record the texture and hardness” of real-world objects. How much resistance does a surgical instrument meet when it encounters soft tissue? And how can that resistance be rendered as a sensation at the surgeon’s point of contact with a robot, such as a joystick handled through a latex surgical glove?

Zhang notes that humans can’t determine direction from high-frequency vibrational signals (the way some animals can), but they can sense direction through the application of force. That’s useful, she continues, because it means the two types of signals, vibration and force, can carry separate information. Vibrations can be used to convey texture, while low-frequency force signals can convey tactile sensations such as hardness, slipperiness, and the overall shape of an object. Each surgeon’s sensitivity to such feedback differs, and can change with age or even with the thickness of the surgical glove she is wearing on a particular day. Men and women, Zhang says, also differ in their sensitivity to touch. That means the point of interaction with any robot might need to be tunable, but also that the feedback it provides should be engineered to be as broadly useful as possible.

Fundamentally, Zhang emphasizes, haptic feedback is the art of illusion. She can’t recreate every sensation that would be apparent in an actual surgery, because not all of it can be translated. But she hopes that what she and other researchers develop will be applicable to different types of tissues, organs, and surgeons, so that it can be widely deployed in robot-assisted surgeries.
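Zhang’s point that force and vibration can carry separate information suggests one simple way to route a measured signal into two feedback channels. The Python sketch below assumes a single stream of force samples from an instrument and splits it with a first-order low-pass filter: the smoothed part feeds the force channel (hardness, overall shape) and the residual feeds the vibration channel (texture). The function name, the cutoff frequency, the sample rate, and the per-user gains are hypothetical stand-ins for the tunability Zhang describes, not her lab’s method.

```python
import math


def split_force_signal(samples, sample_rate_hz, cutoff_hz,
                       force_gain=1.0, vibration_gain=1.0):
    """Split force samples into a low-frequency force channel and a
    high-frequency vibration channel.

    A first-order (single-pole) low-pass filter extracts the slow component;
    the vibration channel is whatever the filter removed. The gains stand in
    for per-user tuning (hypothetical parameters).
    """
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)   # time constant of the filter
    alpha = dt / (rc + dt)                   # discrete smoothing factor in (0, 1)

    force_channel, vibration_channel = [], []
    low = samples[0] if samples else 0.0     # start the filter at the first sample
    for x in samples:
        low += alpha * (x - low)             # slow part, e.g. tissue stiffness
        high = x - low                       # fast part, e.g. surface texture
        force_channel.append(force_gain * low)
        vibration_channel.append(vibration_gain * high)
    return force_channel, vibration_channel


if __name__ == "__main__":
    rate = 1000.0  # samples per second (assumed)
    t = [n / rate for n in range(1000)]
    # A steady 1 N push plus a small 250 Hz "texture" vibration.
    signal = [1.0 + 0.05 * math.sin(2 * math.pi * 250 * s) for s in t]
    force, vibration = split_force_signal(signal, rate, cutoff_hz=20.0)
    print(f"force range: {min(force):.3f} to {max(force):.3f} N")
    print(f"peak vibration: {max(map(abs, vibration)):.3f} N")
```

Because the two channels always sum back to the input (when both gains are 1), tuning one gain for a particular surgeon changes how strongly that sensation is rendered without discarding the other.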

“Fancy Pants”: Robots Built for Patients

Bryant Butler was a 33-year-old attorney working long hours at a law firm in the Washington, D.C., area when a stroke altered his life forever. After a root canal for an abscessed tooth, he developed what his physician diagnosed as a sinus infection, and was treated with antibiotics multiple times over four and a half months. But the infection in his tooth had spread silently to the surface of one of his heart valves, forming a bacterial growth. When pieces of that growth broke off, the toxic fragments spilled into his bloodstream and reached his brain, causing numerous strokes that left Butler partially paralyzed on one side. He couldn’t walk, talk, or think clearly. That was 16 years ago.

After a year of rehab and speech therapy, Butler returned to work part-time at his firm. In July 2005, before his stroke, he had billed 195 hours. Two years later, he was billing just 36 hours a month. Eventually, the firm asked him to resign, he says. “They needed someone to be using that office full-time.” Looking back at the arc of events, he adds, his resignation was an important step on the path to accepting that his life had irrevocably changed.

Today, Butler is a participant in trials to evaluate soft, wearable robots—the creations of Maeder professor of engineering and applied sciences Conor Walsh—that help stroke patients and others walk again.

Bryant Butler dons an ankle robot that helps him walk, after a stroke compromised his mobility.

Photograph by Jim Harrison

“Conor,” says Lawrence professor of engineering Robert Howe, “is remarkable. He invented the whole field of soft exosuits. Previously, people were building these big, rigid, clunky things, which, you know, are great if you’re trying to make a paraplegic walk again. But for the elderly, or post-stroke patients, where you want to give some assistance, they’re not going to drag around 50 pounds of robot.” Instead of creating heavy “Iron Man”-like exoskeletons, Walsh’s approach has been to use small motors and cables attached at the hip or below the knee to apply just the right amount of force at the right time, thereby lowering the energy costs of movement or correcting gait deficits caused by injury. The field has advanced rapidly. Walsh has expanded his work into pneumatic assistive devices for the upper body, and some of the technology is already available commercially. Adds Dupont, “He has been fantastically successful in this area, and is the best representative of the entire subfield of assistive and rehabilitative robotics.”

The grandson of stonecutters on both sides of his family, Walsh came to the United States from a rural area in the foothills outside Dublin to pursue graduate studies at MIT. He first worked on robotic exoskeletons there, testing the devices and learning about the intersection of biomechanics and the engineering of human augmentation technologies. Then, for his doctoral work, he shifted to medical devices that could be used in surgery. But after he was hired as an assistant professor at Harvard’s School of Engineering and Applied Sciences (SEAS) in 2012, he became fascinated by the work of colleagues who were developing soft robots: small devices for gripping, crawling, and swimming.

Conor Walsh has developed wearable robots that help people whose gait is impaired.

Photograph by Jim Harrison

“I’d never thought about soft robotics before,” he recalls. “And then I started to make the connection between what was possible with soft robotics and wearable robotics,” revisiting the ideas behind his prior work but “looking at it from a fresh perspective.”

Initially, Walsh was interested in enhanced human performance. His first soft, wearable robot, developed with funding from the Defense Advanced Research Projects Agency, was designed to help soldiers travel farther, and under heavier loads, than they could naturally. In his Biodesign lab in the new science and engineering complex in Allston, evidence of this first project abounds. The prototype, bristling with wires and dubbed “fancy pants,” is positioned near the lab entrance. Beyond are large tables with bolts of cloth stowed in cubbies below, and in an adjacent room, neatly lining rows of shelves, are the special boots from that first experiment. Their attachment points are for cables that tighten at the heel to push the toe down as the user pushes off the ball of the foot, then shorten in front to lift the toe as the foot swings through for the next step.
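The cable timing in those boots (heel cable during push-off, front cable during swing) amounts to a controller that switches on the phase of the gait cycle. The Python sketch below is a minimal illustration under loudly stated assumptions: it estimates the phase from the time since the last heel strike and a steady stride duration, and the phase windows, stride time, and function names are hypothetical round numbers, not the settings of Walsh’s devices, which aim to apply the right force at the right time for each wearer.

```python
from typing import Optional

# Hypothetical phase windows, in percent of the gait cycle (0% = heel strike).
# Illustrative assumptions only, not the parameters of any real exosuit.
PUSH_OFF_WINDOW = (40.0, 62.0)  # late stance: the foot rolls onto its ball
SWING_WINDOW = (62.0, 100.0)    # foot off the ground, swinging forward


def gait_phase_percent(time_since_heel_strike: float, stride_duration: float) -> float:
    """Estimate gait phase from elapsed time, assuming a steady stride duration."""
    return 100.0 * (time_since_heel_strike % stride_duration) / stride_duration


def active_cable(phase_percent: float) -> Optional[str]:
    """Return which cable to tension at this phase, or None to leave both slack."""
    phase = phase_percent % 100.0
    if PUSH_OFF_WINDOW[0] <= phase < PUSH_OFF_WINDOW[1]:
        return "heel"   # tighten heel cable: pushes the toe down to assist push-off
    if SWING_WINDOW[0] <= phase < SWING_WINDOW[1]:
        return "front"  # shorten front cable: lifts the toe so it clears the ground
    return None         # early stance: no assistance


if __name__ == "__main__":
    stride = 1.1  # seconds per stride (assumed)
    for t in (0.1, 0.55, 0.9):
        phase = gait_phase_percent(t, stride)
        print(f"t={t:.2f} s  phase={phase:5.1f}%  cable={active_cable(phase)}")
```

Keeping the windows as named constants makes them easy to adjust for an individual wearer, which is where any real personalization would enter this sketch.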

Once Walsh had developed the engineering to provide walking assistance to healthy individuals using these ankle robots, he began translating the device to help people whose gait is impaired.

A motion-capture lab on the lower level of the building, a University core facility for measuring human locomotion, enables the detailed gait analysis this work requires. Plates in the floor can measure forces generated when walking, while mask-equipped treadmills and stationary cycles can measure oxygen consumption, a proxy for energy expenditure. An oval track that traces the perimeter of this long, rectangular, gym-like space is mirrored on the ceiling, where harnesses can be attached to ensure that study participants (stroke patients, people with Parkinson’s disease, or people who have suffered spinal-cord injuries) can safely test Walsh’s robots. ReWalk Robotics licensed the ankle robot technology from Harvard in 2016.

Walsh also works with patients through longstanding collaborations with rehabilitation scientists at Massachusetts General Hospital (MGH) and at Boston University—people like Lou Awad, a specialist in biomechanics and rehabilitative physical therapy whom Walsh met when both were working on robotics at Harvard’s Wyss Institute. These collaborations are critical, Walsh says, because his work is driven not by applied mathematics, but by data: “Every research project that we share involves three to five people who define the goals of the study, plan study visits, bring in participants, test technologies, collect data, and then try to analyze and interpret that data, to understand if what we are doing is helping people or not.” Says Walsh, “I’m learning from that, and iterating continuously.”

Recently, his group made an exciting breakthrough with a Parkinson’s patient. A subset of individuals with the disease freeze suddenly when walking, and can’t easily start walking again. Walsh’s team has found that an individual with this problem can walk normally, no longer freezing in mid-gait, while wearing a different kind of exosuit, this one designed to help the thigh swing forward.

The next step for his soft exosuits involves greater personalization and the ability to use the devices with less supervision. Bryant Butler recently tested the ankle version of the exosuit at home for a month, and found that it aided his walking, and also provided a therapeutic benefit: improving his gait even in the moments when he wasn’t wearing it.

Says Walsh, “The field is evolving toward assessment,” the continuous monitoring of users. “We need practical ways to sense how people are moving in the real world.” As a first step in that direction, described in a recent research paper, Walsh teamed up with Howe and postdoctoral fellow Richard Nuckols to use Howe’s high-speed ultrasound imaging technology (see sidebar below) to analyze an exosuit user’s muscle dynamics in real time, enabling nearly instantaneous calibration of the robot to the individual, and to that person’s specific activity at any given moment.

“How can we track improvement if a person is going through rehab, or monitor degradation in performance if a person has, for example, a neurodegenerative disease,” asks Walsh, “which will cause them to get worse over time? Or maybe they have begun a new medication and we want to know if it is helping. At the moment, we just don’t have the tools that allow us to do that.”

This type of feedback would be especially useful for patients with amyotrophic lateral sclerosis (ALS, commonly known as Lou Gehrig’s disease), which is progressive. Pneumatic robots that Walsh has engineered to support the shoulder or exercise the hand aid in upper-body movements. Assistant professor of physical medicine and rehabilitation Sabrina Paganoni, a clinical collaborator and physician investigator at MGH’s Healey Center for ALS, says these robots are ideal because they are modular. “You could start with one,” she says, “and then add the other,” depending on the pattern of disease progression. Drugs for treating ALS are just over the horizon, she reports, which will only increase this patient population, as more patients live longer but require assistance. And a collaboration with senior lecturer on neurology Leigh Hochberg, the MGH-based inventor of BrainGate (an implantable neuroprosthetic that translates the intention of the user into computer commands), may make it possible someday for these patients to move their arms and hands using only brain signals that could direct the action of Walsh’s robots.

Butler, who hasn’t been able to fully sense the position of his foot since the stroke, twisted his ankle 10 years ago. Now, when he walks, he must focus to avoid reinjuring it. Wearing the robot, he says, makes walking “much less of a cognitively demanding task, by showing me how it is supposed to feel.” He doesn’t have a car, so he does all his errands on foot. “I didn’t realize how draining” the act of simply walking was, he says, “until after I had used the device for eight weeks, and then stopped using it. I wish I could use it every day. It gives me a sense of safety and confidence while walking that I haven’t felt in a long time.”