Click, click, click. Sharp staccato reports, like firecrackers or a log spitting as it burns, build to a crescendo resembling the rattle of a rapidly spinning cog, followed by a final extra click. Perhaps one sperm whale is telling another, “Dive.” No one yet knows for sure. These creatures, the largest-brained animals on Earth, communicate in codas: patterns of loud clicks. The Cetacean Translation Initiative (Project CETI) is an ambitious effort to decipher these vocalizations, an undertaking that involves cryptographers, linguists, marine biologists, and experts in artificial intelligence and robotics. If they can gather enough data, these scientists say, they should be able to communicate for the first time with another species.

The idea that humans might learn to speak whale sprang from a chance encounter at the Radcliffe Institute for Advanced Study seven years ago. During an institute fellowship in 2017, marine biologist David Gruber, a professor at the City University of New York, was listening to sperm whale codas in his office. He had come to study jellyfish, primarily “to be with Rob [Wood],” a professor in the School of Engineering and Applied Sciences who was developing soft robots, some with linguini-like fingers, that could handle the delicate jellies without harm. But that day, MIT cryptographer Shafi Goldwasser, also an institute fellow, heard the clicks from her office across the hall, and to her they sounded a bit like Morse code. Intrigued, she stopped in and suggested that advanced techniques in machine learning might enable scientists to decode the clicks. Gruber was captivated by the idea, and by the possibility of uniting researchers from diverse disciplines, and people everywhere, around a goal that would turn advanced technologies, so often at risk of being exploited for their destructive potential, toward promoting human compassion and empathy for another intelligent species.

Goldwasser introduced Gruber to Michael Bronstein, yet another Radcliffe Fellow, now the DeepMind professor of artificial intelligence at the University of Oxford. Bronstein explained how deep learning technologies were being used to create early language models, forerunners of today’s large language models (LLMs) such as ChatGPT, which are adept at understanding, translating, and generating text. The key to the success of such systems, however, is access to vast quantities of training data. For this approach to work with sperm whale communication, titanic quantities of audio recordings would be needed.

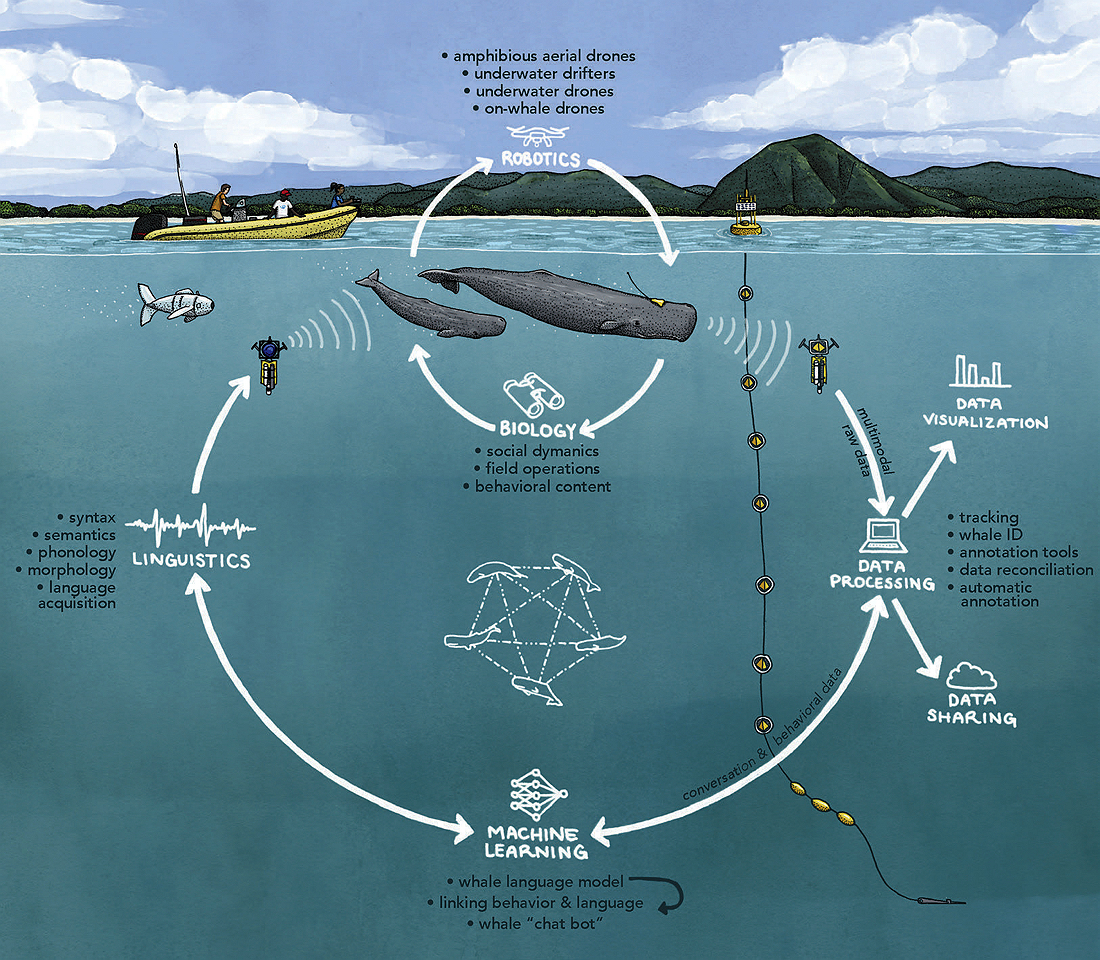

In 2019, Gruber, Bronstein, and Goldwasser returned to Cambridge for a two-day Radcliffe-funded exploratory seminar that attracted biologists, roboticists, linguists, and machine learning experts from around the world. Could the dream of understanding whale communication really work? By the end of the second day, a consensus had formed: it was possible. Project CETI, a nonprofit established in New York City with a field research station in Dominica, was formed the following year with five years of funding from the TED Audacious Project. More than 15 institutional partners, including Harvard, are involved in the organization’s efforts to listen to and translate sperm whale communication.

Engineering for Extremes

Before 1957, no one knew that sperm whales vocalize. That is surprising given how intensely the species (Physeter macrocephalus) was hunted for its oil in the nineteenth century, and doubly so given that its clicks can exceed 200 decibels, far louder than a rock concert or an airplane engine during takeoff. Sperm whales live about 70 years, congregate in matrilineal family groups, and use their powerful acoustical capabilities to communicate with other members of their pod and to echolocate squid, a favorite food. Off the coast of Dominica, part of the Windward Islands chain in the Caribbean Sea, biologist Shane Gero has been studying these toothed cetaceans on a shoestring budget for 15 years, and he has made recordings of some 10,000 click vocalizations during that time. Gero is now Project CETI’s lead field biologist, and his computer science colleagues have determined that to decipher what the whales are saying, they will need on the order of 4 billion vocalizations.

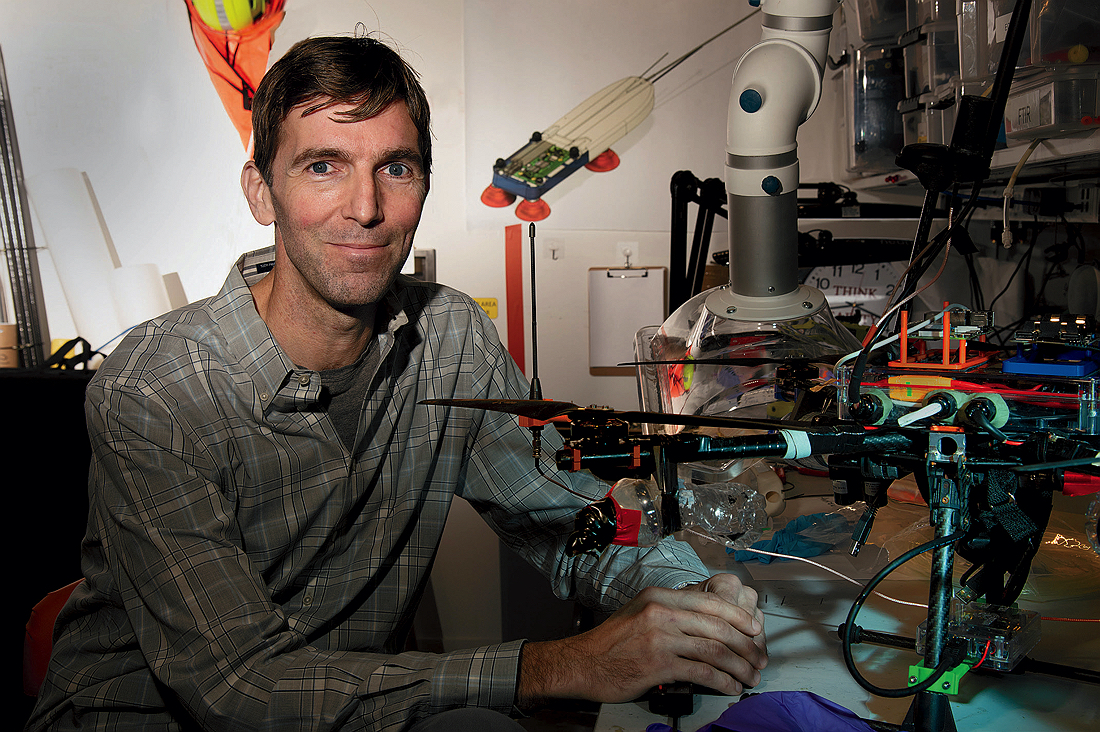

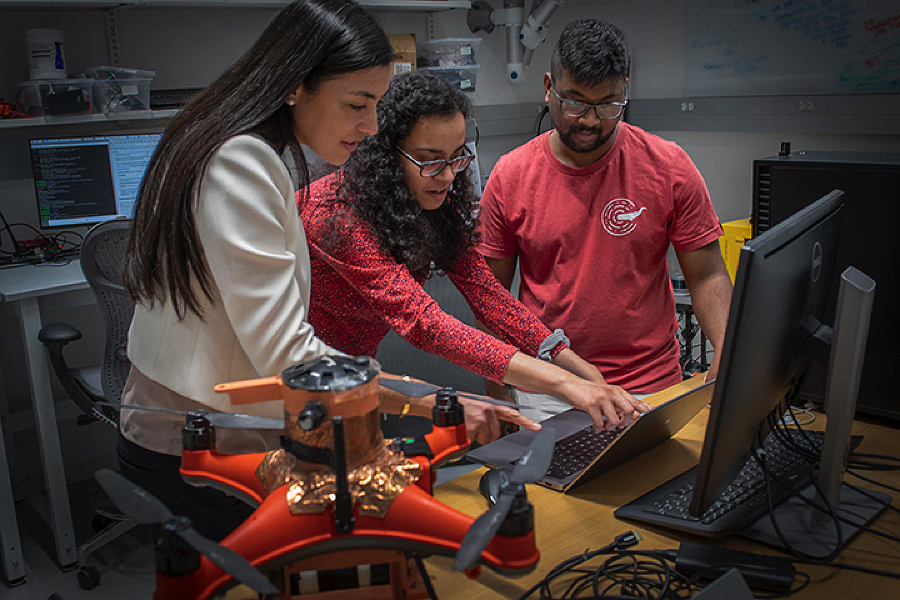

Fast-forward from that chance connection at Radcliffe in 2017 to a laboratory in Harvard’s science and engineering complex in Allston. There, in an effort to link audio recordings and contextual behaviors to specific whales, scientists in the Harvard microrobotics laboratory of Robert Wood, the Harry Lewis and Marlyn McGrath professor of engineering, have been developing tags that can be attached to whale skin with suction cups and then released. The tags are packed with microelectronics embedded in pressure-tolerant epoxy and can record sound, location, depth, light, temperature, and, in some cases, video. Challenges abound in tag engineering: wet sperm whale skin appears smooth but is rough at the micron level, and it sloughs off 300 times faster than human skin. The speeds at which the whales swim, powering through saltwater at up to 30 miles per hour, create tremendous shear forces that can rip the tags free. And the whales’ dives can exceed a kilometer in depth, so the devices must withstand pressures in excess of 100 atmospheres. (Read more in “Data Collection in the Deep,” page 39.)
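As a rough check on the 100-atmosphere figure, the hydrostatic pressure at a depth of one kilometer can be estimated from $P = \rho g h$, assuming a typical seawater density of about 1,025 kg/m³ and standard gravity (values chosen for illustration):

$$
P_{\text{gauge}} = \rho g h \approx (1025\,\mathrm{kg/m^3})(9.81\,\mathrm{m/s^2})(1000\,\mathrm{m}) \approx 1.01\times10^{7}\,\mathrm{Pa} \approx 99\,\mathrm{atm}
$$

Adding roughly 1 atm of air pressure at the surface gives about 100 atm at one kilometer, and deeper dives push the figure higher.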

“There’s a set of tradeoffs,” says Wood, “a fight over what’s going to last longer: the battery life, the amount of memory they have on board, or the adhesion mechanism.” Some aspects of tag development are not typical academic work, he says, but involve “a lot of good, hardcore engineering,” for which the lab hires professional staff, external consultants expert in embedded systems, and engineering students from universities with work-study programs, such as Northeastern, MIT, and Waterloo in Canada. The up-close recordings the tags provide will be critical for annotating the audio data prior to analysis, he continues, and will help clarify which whales are vocalizing. (Often, two sperm whales in apparent conversation will talk simultaneously, as if speaking over each other. But Bronstein has speculated that, with their big brains, they might be able to speak and listen at the same time. No one knows for sure.)

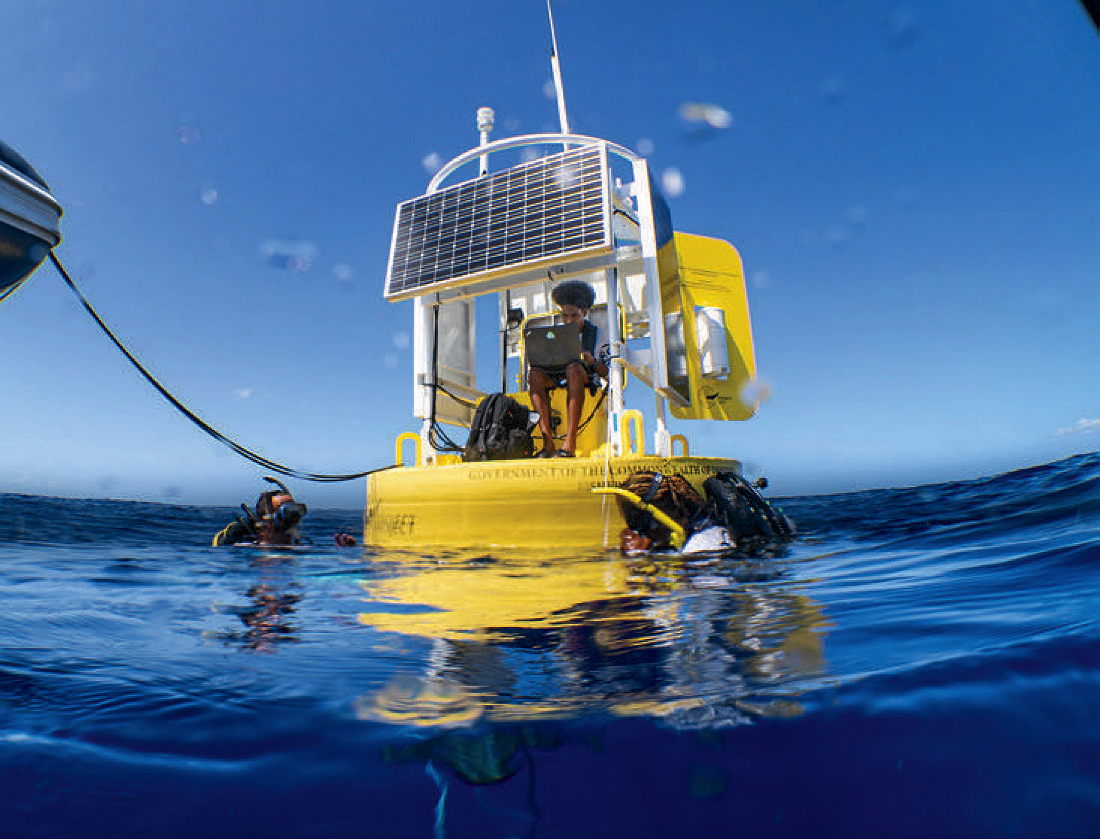

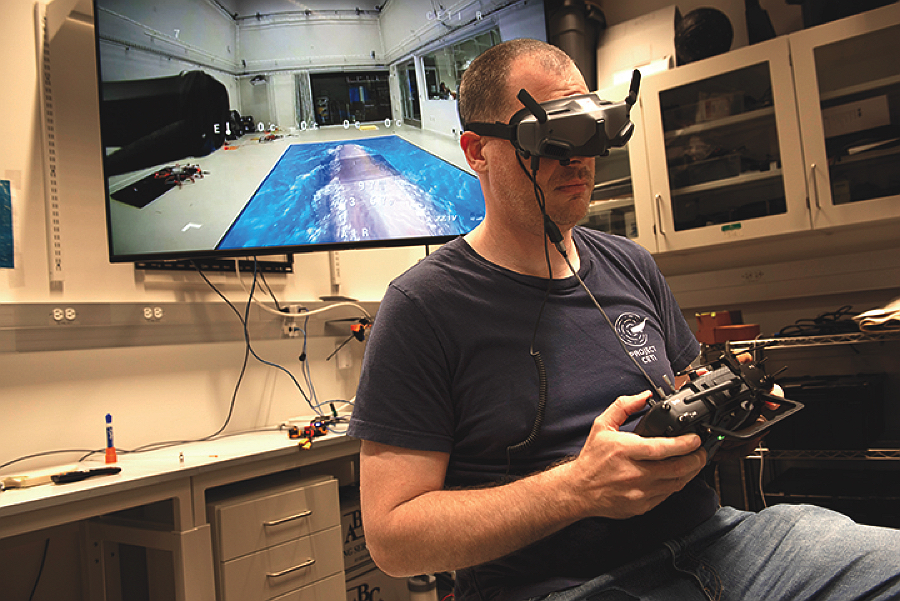

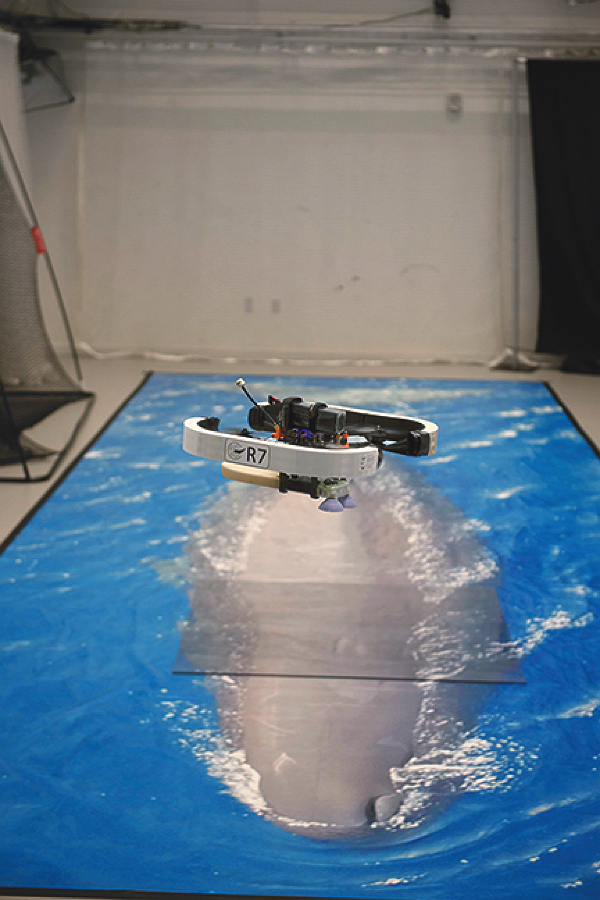

Whatever the sophistication of the recording technology, data collection, which takes place around Dominica (the only country in the world where sperm whales reside year-round), remains human-intensive. A great deal of time is spent tracking the tagged whales visually, acoustically, and with handheld directional antennas that can detect VHF pings emitted by the tags. “The whales spend most of the day underwater,” explains assistant professor of computer science Stephanie Gil; they surface for only “about 10 minutes every hour.” Gil has therefore been working with Wood and a pair of doctoral students to develop a system that will eventually automate the process. Acoustic information gathered from fixed underwater hydrophones enables the researchers to calculate a whale’s angle of arrival, the direction from which its clicks reach the sensors, and from that to estimate where the whale is likely to surface. Sperm whales stop clicking just before they surface, and when they do, a pre-positioned autonomous drone, launched from a boat and guided by these streams of location data, could fly to meet them. Ultimately, Gil’s team hopes to build drones to affix and retrieve tags, in a framework she describes as “a self-sustaining data gathering loop.”
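The article does not describe how the team actually computes angle of arrival. One textbook approach is sketched here under stated assumptions: two hydrophones a known distance apart, a distant (far-field) click source, a nominal sound speed of 1,500 m/s, and idealized click recordings. The delay between the two recordings is found by cross-correlation and then converted to a bearing. The function names `estimate_delay` and `angle_of_arrival` are hypothetical.

```python
import math
from typing import Sequence

# Nominal speed of sound in seawater; the true value varies with temperature,
# salinity, and depth (assumption for illustration).
SPEED_OF_SOUND_SEAWATER = 1500.0  # meters per second


def estimate_delay(sig_a: Sequence[float], sig_b: Sequence[float],
                   sample_rate_hz: float) -> float:
    """Time difference of arrival (seconds) between two equal-length recordings.

    Brute-force cross-correlation: the lag that best lines up sig_b with sig_a
    wins. A positive result means the click reached hydrophone B later.
    """
    n = len(sig_a)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-(n - 1), n):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < len(sig_b):
                score += sig_a[i] * sig_b[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate_hz


def angle_of_arrival(delay_s: float, spacing_m: float,
                     c: float = SPEED_OF_SOUND_SEAWATER) -> float:
    """Bearing of a distant click source, in degrees from broadside.

    For a far-field source, the extra path to the farther hydrophone is
    spacing * sin(theta), so sin(theta) = c * delay / spacing.
    """
    ratio = c * delay_s / spacing_m
    if abs(ratio) > 1.0:
        raise ValueError("delay exceeds what this hydrophone spacing allows")
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    rate = 48_000  # samples per second (hypothetical)
    a = [0.0] * 64
    b = [0.0] * 64
    a[10] = 1.0  # idealized click reaches hydrophone A at sample 10
    b[25] = 1.0  # and hydrophone B 15 samples later
    delay = estimate_delay(a, b, rate)
    print(f"delay = {delay * 1e6:.1f} microseconds")
    print(f"bearing = {angle_of_arrival(delay, spacing_m=1.0):.1f} degrees")
```

With a 15-sample delay and hydrophones one meter apart, the estimated bearing comes out at roughly 28 degrees. A single pair of hydrophones gives only a direction, with a mirror-image ambiguity and no range; combining bearings from several sensors is what narrows down where a whale might be.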

The Linguistic Leap

But even as the engineering team devises a strategy to dramatically scale up data collection—to feed the data-hungry machine learning system they hope could translate sperm whale vocalizations—their CETI colleagues involved in trying to understand click communication have forged ahead. Using the recordings they have so far, most of them painstakingly gathered by Gero, they have detected previously unknown complexities in the sounds generated by the whales.

In the past, sperm whale codas have been analyzed principally in terms of the number of clicks and the intervals between them. One recent study, which the researchers dubbed in shorthand the discovery of a “whale phonetic alphabet,” was reported last September by a team at MIT. It described “fine-grained modulation of inter-click intervals relative to preceding codas,” as well as the addition of an extra click to existing codas, features that varied with the context in which the whales were vocalizing. The researchers also documented independent changes in rhythm and tempo. Combined, these elements suggest that click communication is an order of magnitude more complex than previously suspected.
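One way to make these features concrete is to compute them from click timestamps. The sketch below assumes each coda arrives as a list of click times in seconds. It treats tempo as the coda’s total duration and rhythm as the inter-click intervals scaled by that duration, and it measures how much each coda’s duration changes relative to the preceding coda. These definitions, and the names `coda_features` and `describe_exchange`, are illustrative assumptions, not the MIT team’s published method.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CodaFeatures:
    n_clicks: int
    icis: List[float]        # inter-click intervals, in seconds
    tempo: float             # coda duration, first click to last, in seconds
    rhythm: List[float]      # ICIs divided by the duration, so they sum to 1
    duration_change: Optional[float]  # vs. the preceding coda; None if first


def coda_features(click_times: List[float],
                  prev_duration: Optional[float] = None) -> CodaFeatures:
    """Summarize one coda given its click times (seconds)."""
    if len(click_times) < 2:
        raise ValueError("a coda needs at least two clicks")
    times = sorted(click_times)
    icis = [later - earlier for earlier, later in zip(times, times[1:])]
    duration = times[-1] - times[0]
    if duration <= 0:
        raise ValueError("clicks must not all share one timestamp")
    rhythm = [ici / duration for ici in icis]
    change = None if prev_duration is None else duration - prev_duration
    return CodaFeatures(len(times), icis, duration, rhythm, change)


def describe_exchange(codas: List[List[float]]) -> List[CodaFeatures]:
    """Features for a sequence of codas, each compared with the one before it."""
    features: List[CodaFeatures] = []
    prev_duration: Optional[float] = None
    for clicks in codas:
        f = coda_features(clicks, prev_duration)
        features.append(f)
        prev_duration = f.tempo
    return features


if __name__ == "__main__":
    exchange = [
        [0.0, 0.25, 0.5, 0.75, 1.0],        # five evenly spaced clicks
        [0.0, 0.25, 0.5, 0.75, 1.0, 1.25],  # same spacing plus an extra click
    ]
    for f in describe_exchange(exchange):
        print(f.n_clicks, f.tempo, f.duration_change)
```

Detecting an added click would then amount to comparing `n_clicks` against the usual click count for a given coda type, a step this sketch leaves out.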

The paper generated tremendous excitement among the scientists because simultaneous work by Shafi Goldwasser had suggested that the more complex a system of animal communication, the more likely a machine learning algorithm would be to succeed in translating it into something humans could understand.

Then, in December, Project CETI’s linguist, Gašper Beguš, Ph.D. ’18, took the understanding of click complexity further, revealing acoustic properties in codas that are analogous to the vowels and diphthongs of human speech. Beguš, whose studies under professor of linguistics Kevin Ryan focused on phonology, the sound systems of human language, argued that the number of clicks and their timing correspond to vowel duration and pitch in human speech, and that properties such as click timbre and harmonics correspond to the resonant frequencies formed by the human vocal tract in speech and song. These previously unobserved characteristics of click sounds, which occur alongside associated patterns of whale behavior, appear not to be artifacts, Beguš wrote, but to be under the whales’ control.

“Some of the insights that Gašper is adding to the project,” says Gruber, “totally eluded the machine learning teams.” (Beguš characterizes them as “good old-fashioned philology.”) Gruber recalls that the late Roger Payne ’56, a Project CETI adviser whose discovery of humpback whalesong sparked the worldwide whale conservation movement, once said, “I can’t believe I went my whole career and never worked with a linguist.”

“What is fascinating to me,” says Beguš, now assistant professor of linguistics at the University of California, Berkeley, is that “maybe for the first time in history, we are studying three intelligences together”: animal, human, and machine. Artificial intelligence doesn’t acquire language in the same way humans do, he continues, but “it has become so good that linguists have to take it seriously.” The addition of another biological intelligence is an element that has been missing, he says, “because the data are so difficult to get,” but he feels the Project CETI effort is extremely promising because of the similarities between sperm whales and humans. Young whales are raised by their mothers until they are teens and learn codas over time. “Sperm whales also have complex social structures,” he points out. A signal example occurred last summer off the coast of Dominica, when 12 genetically related adult females attended a birth observed by Project CETI scientists, vocalizing with several previously unheard codas.

This social complexity, paired with an inability to communicate by gestures, facial expressions, or other physical means, raises the odds that the communication embedded in click vocalizations is complex, Beguš believes. “In elephants, there are a million ways they could communicate,” but the lack of nonverbal communication in whales suggests that if information is being transmitted in the long dialogues that they have during socialization, it is likely to be packed into the sounds they make. At the great depths where they hunt in darkness, sound is the only means of communication. “I’ve never seen anything like that” elsewhere in the animal world, he says.

“Language is a symbolic system,” Beguš points out. “We can’t infer meaning from the sound itself.” But he has nevertheless used AI-enabled analysis of the sounds themselves to reveal how sperm whale clicks could be encoding information. He’s been working with a form of AI that acquires language differently than large language models do. These systems learn by imitation, the way human babies do, rather than by replicating language based on massive quantities of training data. What is special about these “artificial baby learner language models” is that even when trained on only a few words of English, they can generate new words that were not part of their training data. Though not all the words such a system comes up with are actual English words, they are plausible outputs based on the network’s analysis of patterns in human language, and so they can be used to identify salient features of language, including phonetics. Applying these models to whale vocalizations rather than human language enabled Beguš and his colleagues to uncover how the codas could be encoding information: through features reminiscent of vowels and diphthongs in human speech.

The What-Ifs of “Hello”

On Friday nights when Gruber is in town, he and Wood grab dinner. The two are as superficially unlike as one can imagine: Gruber is a dreamer, and Wood is as pragmatic and straightforward as his considerable engineering prowess might suggest. “It’s not as easy as you might think for a roboticist to work with a marine biologist,” admits Gruber. But they are friends and, beyond Project CETI, continue to collaborate on soft robotics projects for handling fragile marine creatures: not only the jellyfish that initially brought Gruber to Radcliffe but, more recently, delicate deep-sea sponges as much as 18,000 years old. Their dinner conversations at Charlie’s Kitchen are freewheeling. In those moments, Wood ponders questions small and large, such as what will happen if they succeed: “If we knew what the whales were saying, what would we say?” Not only philosophically, but “even the mechanics of it, the underwater speakers: How would we place them so as not to freak them out with a disembodied voice? How do we gently say ‘Hello,’” he wonders, “in whale-ish?”